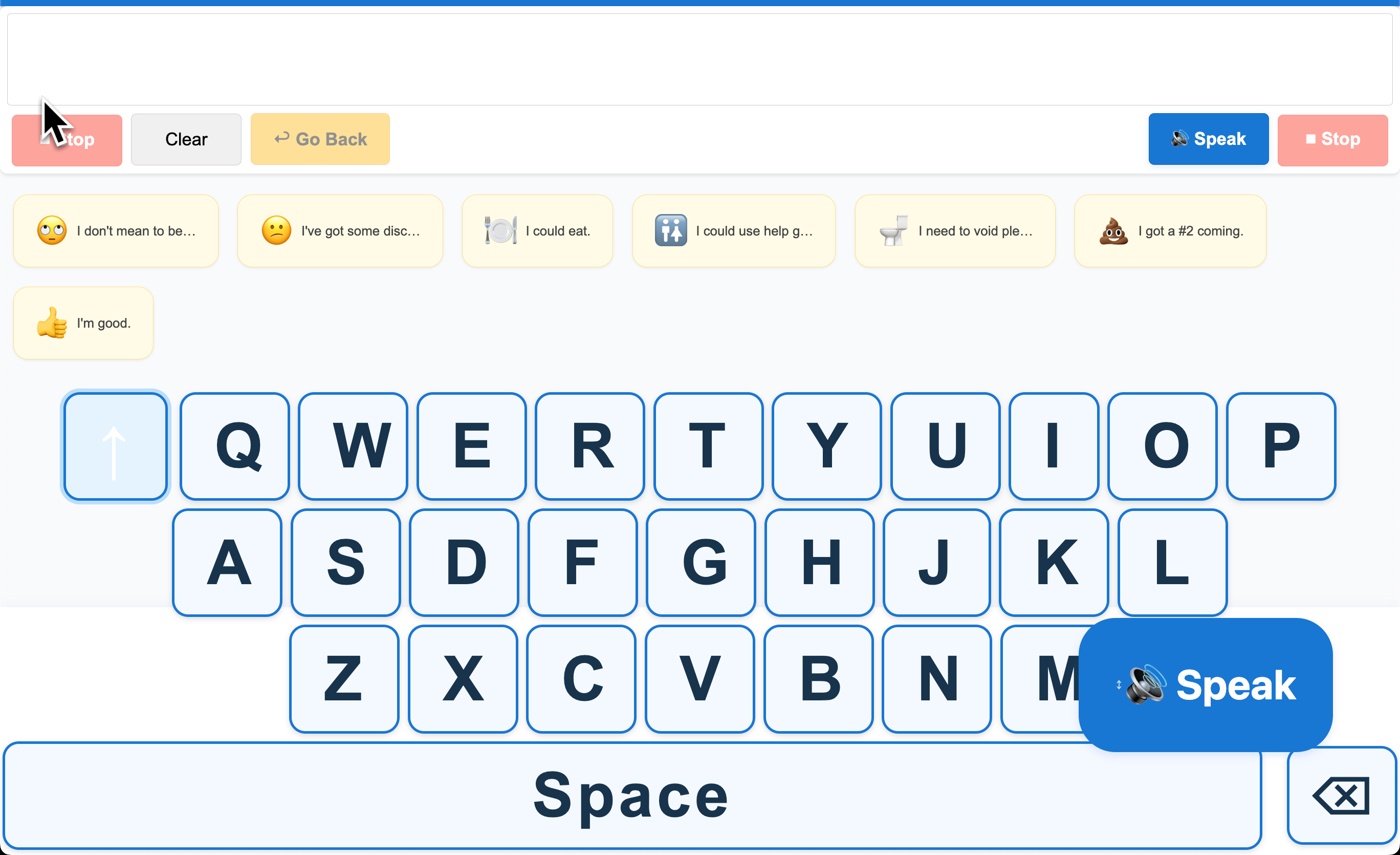

An open‑source augmentative and alternative communication (AAC) Progressive Web App that lets a user with motor impairment type sentences via large touch‑friendly keys and have them spoken aloud.

Project goal: deliver a base that works on any tablet with no install friction and can later grow to eye‑tracking and adaptive prediction.

- Improved communication – Allow users with significant motor impairment to more easily express their thoughts and needs.

- Edge-hosted – Cloudflare Pages provides low latency and wide availability at near zero cost.

- Incremental learning – keystrokes will later feed a per‑user language model to cut typing effort over time.

npm install

npm run dev

npm run build

npm run preview

- Sign up for a free Cloudflare account.

- Install Wrangler CLI:

npm install -g wrangler

- Configure your account:

  - Replace `account_id` in `wrangler.toml` with your Cloudflare account ID.

- Build the app:

npm run build

- Deploy the static site:

wrangler pages deploy dist --project-name talker-mvp

- Deploy the API worker:

wrangler deploy worker/index.ts --name talker-mvp-api

- Visit your deployed site:

  - You’ll get a public URL (e.g., https://talker-mvp.pages.dev).

  - Open this URL on any tablet browser.

- No install required: Just open your site URL in Chrome, Safari, or Edge on any tablet.

- Add to Home Screen: Use the browser’s share/menu button and choose “Add to Home Screen” for a fullscreen, app-like experience.

- Works on iPad, Android, Windows tablets, and desktop browsers.

- Offline/PWA: The app is PWA-ready. For full offline support, see the PWA manifest and service worker options in `public/`.

If this is your first commit:

git init

git add .

git commit -m "Initial working Talker MVP"

| Path | Purpose |
|---|---|
| public/ | Static assets + PWA manifest |
| src/ | React TypeScript front‑end |
| worker/ | Cloudflare Worker (stubbed predictions) |
| wrangler.toml | Pages + Worker config |
| tsconfig.json | TS settings |
| vite.config.ts | Dev/build config |
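To make the "stubbed predictions" entry for `worker/` more concrete, here is a minimal sketch of what such a stub could look like. It is not the project's actual `worker/index.ts`: the `VOCABULARY` list, the `predict` helper, the `/predict` route, and the `prefix` query parameter are all assumptions chosen for illustration.

```typescript
// Hypothetical stub: a fixed vocabulary stands in for a real language model.
const VOCABULARY: string[] = ["hello", "help", "hungry", "thank you", "thirsty", "yes", "no"];

// Return up to `limit` vocabulary entries that start with the typed prefix.
function predict(prefix: string, limit = 3): string[] {
  const needle = prefix.trim().toLowerCase();
  if (needle === "") return [];
  return VOCABULARY.filter((word) => word.startsWith(needle)).slice(0, limit);
}

// Worker-style handler (assumed route): GET /predict?prefix=he
// In a real Worker module this object would be the default export.
const worker = {
  async fetch(request: Request): Promise<Response> {
    const url = new URL(request.url);
    if (url.pathname !== "/predict") {
      return new Response("Not found", { status: 404 });
    }
    const predictions = predict(url.searchParams.get("prefix") ?? "");
    return new Response(JSON.stringify({ predictions }), {
      headers: { "Content-Type": "application/json" },
    });
  },
};
```

Keeping `predict` as a pure function separate from the handler means the lookup can later be swapped for a real prediction engine without touching the routing code.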

The Talker MVP is a working base for AAC communication. The UI/UX roadmap below lists planned improvements for users with motor impairments:

- Improved Visual Contrast: Implement high-contrast themes and customizable color schemes to accommodate various visual needs

- Touch Optimization: Fine-tune touch target sizes and spacing based on user testing with the target population

- Haptic Feedback: Add subtle vibration confirmation for key presses where device-supported

- Focus States: Enhance keyboard navigation with more visible focus indicators

- User Profiles: Allow saving preferences, custom phrases, and personal dictionaries

- Layout Customization: Enable repositioning of UI elements based on individual motor abilities

- Voice Selection: Add interface for choosing preferred voice, speech rate, and pitch

- Theme Options: Provide night mode and other visual themes for different environments

- Gesture Support: Implement simple swipe gestures for common actions (backspace, space, clear)

- Context Menus: Add long-press options for additional functionality

- Undo/Redo: Expand the current history feature with multiple levels of undo

- Custom Phrases: Allow users to create, edit and categorize their own phrase buttons

- Save & Load Text: Enable saving and editing typed text as files for longer composition

- User Feedback: Add direct feedback form in settings to create GitHub issues for developers

- Context-Aware Suggestions: Improve predictions by considering sentence context

- Visual Prominence: Make prediction UI more noticeable with better visual hierarchy

- Adaptive Learning: Accelerate the personalized prediction system to learn from user patterns

- Category-Based Predictions: Offer contextual predictions based on conversation topics

- Phrase management – build system for users to save, edit and customize phrases.

- Feedback integration – implement feedback form in settings that generates GitHub issues.

- Prediction engine – compile Presage to WebAssembly and call it from the Worker.

- Data logging – add a `/log` endpoint and nightly KV aggregation.

- Eye tracking – integrate ocular motion detection using standard hardware for improved accessibility.

- Gaze-based typing – increase typing speed based on eye gaze detection of letters.
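As a rough illustration of the data logging item above, the aggregation step could be sketched as a pure function that folds raw keystroke records into per-user, per-day counts. The `KeystrokeLog` shape, the `aggregateDaily` name, and the `userId:YYYY-MM-DD` bucket format are assumptions, not part of the current codebase.

```typescript
// Hypothetical shape of one record posted to the /log endpoint.
interface KeystrokeLog {
  userId: string;
  key: string; // the key or phrase that was pressed
  timestamp: number; // milliseconds since the Unix epoch
}

// Fold a batch of logs into key counts, bucketed as "userId:YYYY-MM-DD" (UTC).
function aggregateDaily(logs: KeystrokeLog[]): Map<string, Record<string, number>> {
  const totals = new Map<string, Record<string, number>>();
  for (const log of logs) {
    const day = new Date(log.timestamp).toISOString().slice(0, 10);
    const bucket = `${log.userId}:${day}`;
    const counts = totals.get(bucket) ?? {};
    counts[log.key] = (counts[log.key] ?? 0) + 1;
    totals.set(bucket, counts);
  }
  return totals;
}
```

A scheduled Worker could run a function like this once a night and store each bucket as a JSON value in a KV namespace. How raw logs are buffered before aggregation is left open here.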

Copyright (c) 2025 Sum1Solutions, LLC

This license uses MIT License terms with an explicit equity stake condition for public companies. It is designed to:

- Promote Open Innovation: The software is free to use for personal, non-profit, and private commercial projects.

- Kind Capitalism Projection: Companies that are, or become, publicly traded while using this software or any work derived from it must grant Sum1Solutions, LLC an equity stake of 1% of total outstanding shares as of the date of the public listing or acquisition. This equity stake is open to negotiation and would likely be shared with other human contributors to the project in a commensurate manner. This equity stake is non-transferable and non-revocable.