Harrish Thasarathan, Julian Forsyth, Thomas Fel, Matthew Kowal, Konstantinos G. Derpanis

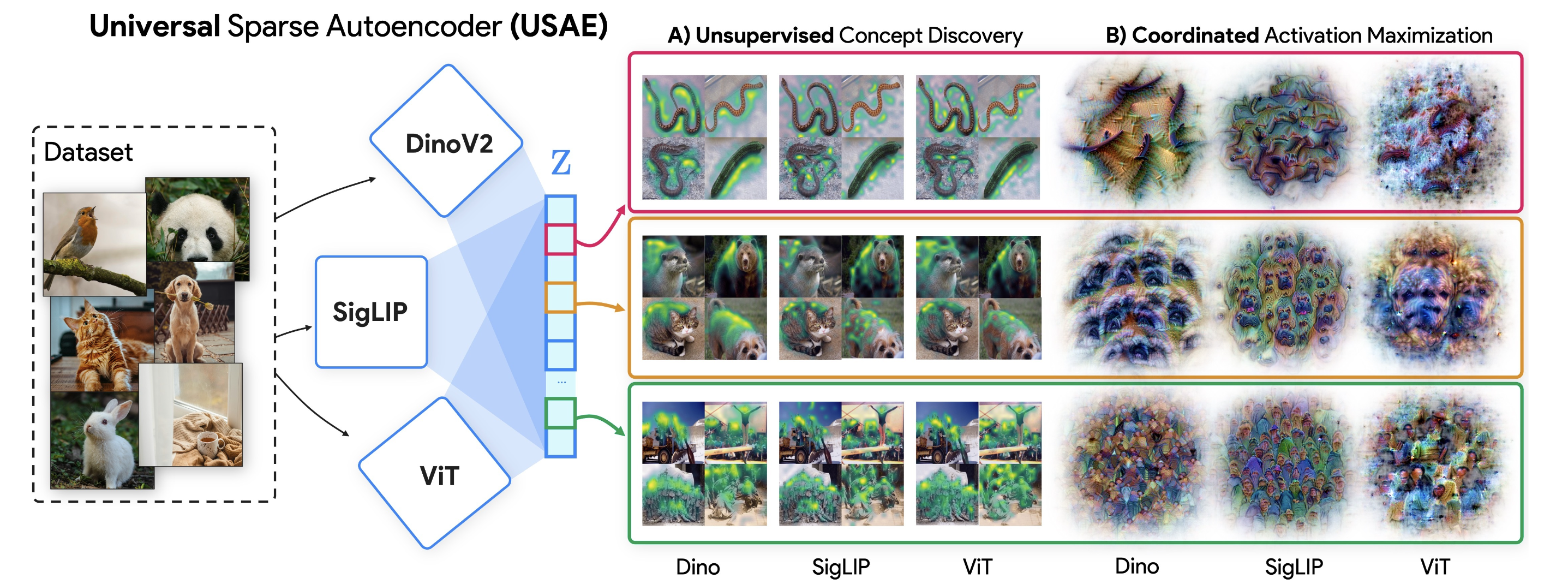

Universal Sparse Autoencoders (USAEs) create a universal, interpretable concept space that reveals what multiple vision models learn in common about the visual world.

See the setup instructions for Overcomplete. Python 3.8 or later is required.

Run `uni_demo.py` to set up and train a USAE: `python -m uni_demo`

- USAE training framework

- Evaluation notebook to validate the metrics of a trained USAE

To replicate the model used in our paper, see the config.yaml file for hyperparameters. The training script expects activations in a specific format (see uni_demo.py for details). Our dataset/loader is customized for ImageNet and assumes activations have been cached as .npz files.

During training, each batch is expected to be in the form:

- A tuple `(X, Y)` of model activations `X` and labels `Y`, where `X` is a dict keyed by model name (`s_1, ..., s_k`) and each value `X[s_i]` has shape `N x d_i` (`N` = batch size, `d_i` = activation dimension of model `s_i`)
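The batch layout above can be illustrated with a small sketch. It uses NumPy arrays as a stand-in for whatever tensor type the training loop actually consumes, and the model names and widths in `MODEL_DIMS` are hypothetical placeholders (the real models and their activation sizes are described in models.py). The helpers `make_dummy_batch` and `check_batch` are illustrative only and are not part of the repository.

```python
import numpy as np

# Hypothetical model names and activation widths d_i; see models.py for the real ones.
MODEL_DIMS = {"model_a": 768, "model_b": 1024, "model_c": 384}


def make_dummy_batch(batch_size, model_dims, num_classes=1000, seed=0):
    """Build a random batch in the (X, Y) layout: X[s_i] has shape (N, d_i), Y has N labels."""
    rng = np.random.default_rng(seed)
    X = {
        name: rng.standard_normal((batch_size, d)).astype(np.float32)
        for name, d in model_dims.items()
    }
    Y = rng.integers(0, num_classes, size=batch_size)
    return X, Y


def check_batch(batch):
    """Check that every model's activations share the same batch size N as the labels.

    Returns the activation dimension d_i found for each model key.
    """
    X, Y = batch
    assert isinstance(X, dict) and len(X) > 0, "X must be a non-empty dict keyed by model"
    n = len(Y)
    for name, acts in X.items():
        assert acts.ndim == 2, f"{name}: expected a 2-D (N, d_i) array"
        assert acts.shape[0] == n, f"{name}: batch size {acts.shape[0]} != {n}"
    return {name: acts.shape[1] for name, acts in X.items()}


batch = make_dummy_batch(8, MODEL_DIMS)
print(check_batch(batch))  # {'model_a': 768, 'model_b': 1024, 'model_c': 384}
```

Because each model keeps its own `d_i`, the batch is a dict rather than a single stacked array; only the batch dimension `N` must agree across models.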

The activation dataset/loader is customized for training on ImageNet:

- requires model activations to be cached

- assumes the activation directory mirrors the ImageNet directory structure

- recommended to store all models' activations for each image in a single .npz file (hence the `combined_npz` parameter)

- see models.py for model activation details

To cache model activations from ImageNet, see the scripts in the caching_acts folder.
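As a rough illustration of the "combined .npz mirroring the ImageNet directory" layout, here is a minimal sketch assuming one .npz file per image, with one array per model name, stored at the same relative path as the source image. The function names (`act_path`, `save_combined`, `load_combined`, `label_from_path`) and the exact key layout are hypothetical; the actual scripts in caching_acts and the repository's loader may organize files differently.

```python
import os
import tempfile

import numpy as np


def act_path(act_root, image_rel_path):
    """Hypothetical mapping: 'train/<wnid>/<img>.JPEG' -> '<act_root>/train/<wnid>/<img>.npz'."""
    stem, _ = os.path.splitext(image_rel_path)
    return os.path.join(act_root, stem + ".npz")


def save_combined(act_root, image_rel_path, acts_by_model):
    """Store every model's activations for one image in a single .npz (one array per model)."""
    path = act_path(act_root, image_rel_path)
    os.makedirs(os.path.dirname(path), exist_ok=True)
    np.savez(path, **acts_by_model)
    return path


def load_combined(act_root, image_rel_path):
    """Read a combined .npz back into a dict {model_name: activation array}."""
    with np.load(act_path(act_root, image_rel_path)) as data:
        return {name: data[name] for name in data.files}


def label_from_path(image_rel_path, class_to_idx):
    """In the ImageNet layout, the parent directory name is the class WordNet ID."""
    wnid = os.path.basename(os.path.dirname(image_rel_path))
    return class_to_idx[wnid]


with tempfile.TemporaryDirectory() as root:
    rel = "train/n01440764/n01440764_10026.JPEG"
    save_combined(root, rel, {"model_a": np.ones(768, np.float32),
                              "model_b": np.zeros(1024, np.float32)})
    acts = load_combined(root, rel)
    print({k: v.shape for k, v in acts.items()})  # {'model_a': (768,), 'model_b': (1024,)}
    print(label_from_path(rel, {"n01440764": 0}))  # 0
```

Keeping all models in one file means a single disk read per image yields the full `X` dict entry, and mirroring the ImageNet tree lets the label be recovered from the path.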

Download the checkpoints from the following link:

- ICML USAE Model Checkpoints

- Place each *.pth file in the checkpoints folder

- Set the config path in example_visualization.py to checkpoints/config.yaml and run the script

If you use this work, please cite:

@inproceedings{thasarathan2025universal,
  title={Universal Sparse Autoencoders: Interpretable Cross-Model Concept Alignment},
  author={Harrish Thasarathan and Julian Forsyth and Thomas Fel and Matthew Kowal and Konstantinos G. Derpanis},
  booktitle={Forty-second International Conference on Machine Learning},
  year={2025},
  url={https://openreview.net/forum?id=UoaxRN88oR}
}

This project was built on top of Thomas Fel's Overcomplete library.