This repository provides a dockerized version of VINS-Mono, a robust and versatile monocular visual-inertial state estimator. This specific version has been packaged to operate as a passive SLAM component within the CRL SLAM Benchmarking (slam-bench) framework.

Instead of processing files directly, this container listens for sensor data on ROS topics and publishes its estimated trajectory, which is then captured and evaluated by the slam-bench system.

Authors: Tong Qin, Peiliang Li, Zhenfei Yang, and Shaojie Shen from the HKUST Aerial Robotics Group

HKUST VINS-Fusion GNU General Public License v3.0

These instructions are the primary method for building and running this project for the SLAM competition.

- Docker Engine

- (Recommended) Follow Docker's post-installation steps for Linux to manage Docker as a non-root user.
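Before building, it can help to confirm that the Docker CLI is installed and that the daemon is reachable without `sudo`. The following is a minimal sketch; the function name `check_docker` is illustrative and not part of this repository.

```shell
# Check that the Docker CLI exists and that the daemon answers for the current user.
# check_docker is a hypothetical helper, not something shipped with this project.
check_docker() {
    if ! command -v docker >/dev/null 2>&1; then
        echo "docker: not installed"
        return 1
    fi
    # "docker info" contacts the daemon; it fails if the user lacks permission.
    if ! docker info >/dev/null 2>&1; then
        echo "docker: installed, but the daemon is not reachable as $(id -un)"
        return 2
    fi
    echo "docker: ok"
}

check_docker || echo "Fix the issue above before running make build."
```

A return code of 2 usually means either the daemon is not running or the post-installation steps for non-root use have not been completed.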

Ensure you are on the correct branch for the competition.

git clone https://github.com/comrob/VINS-Mono-crl.git

cd VINS-Mono-crl

Use the provided Makefile to build the container. This process will set up the ROS environment, install dependencies, and compile the VINS-Mono source code.

cd docker

make build

The build produces a Docker image that is ready to be used by the slam-bench evaluation framework. The image is designed to run the SLAM algorithm on the passive sensor topics provided during the competition.
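To confirm that the build produced an image, one option is to list the most recently created local images. This is only a sketch: the image name and tag are set by the Makefile and are not assumed here, and `list_recent_images` is an illustrative helper.

```shell
# List up to five local images; docker typically prints the newest ones first.
# list_recent_images is a hypothetical helper, not part of this repository.
list_recent_images() {
    if ! command -v docker >/dev/null 2>&1; then
        echo "docker not found" >&2
        return 1
    fi
    # Repository, Tag and CreatedSince are standard "docker images" format fields.
    docker images --format '{{.Repository}}:{{.Tag}}  {{.CreatedSince}}' | head -n 5
}

list_recent_images || echo "Could not list images."
```

An entry created a few seconds or minutes ago is most likely the image that `make build` just produced.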

VINS-Mono is a real-time SLAM framework for Monocular Visual-Inertial Systems. It uses an optimization-based sliding window formulation to provide high-accuracy visual-inertial odometry.

- Efficient State Estimation: Features IMU pre-integration with bias correction, automatic estimator initialization, and online extrinsic calibration.

- Robustness: Includes failure detection and recovery mechanisms.

- Large-Scale Mapping: Supports loop detection, global pose graph optimization, map merging, and pose graph reuse.

- Advanced Camera Support: Online temporal calibration and support for rolling shutter cameras.

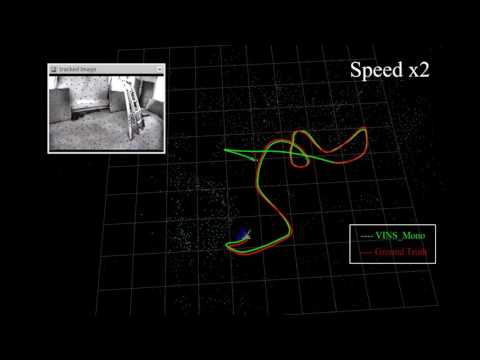

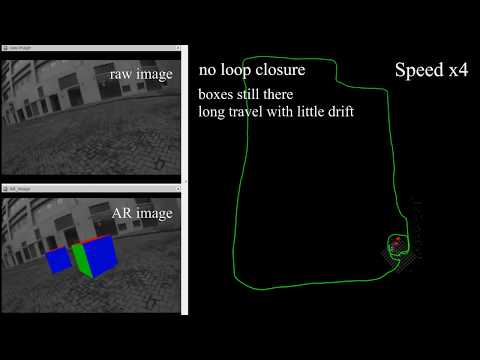

(Left to Right): EuRoC dataset performance; Indoor and outdoor flight; AR application.

If you use VINS-Mono for your academic research, please cite the following papers.

- VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator, Tong Qin, Peiliang Li, Zhenfei Yang, Shaojie Shen, IEEE Transactions on Robotics (PDF)

- Online Temporal Calibration for Monocular Visual-Inertial Systems, Tong Qin, Shaojie Shen, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS, 2018), Best Student Paper Award (PDF)

A BibTeX citation file can be found here.

The following instructions are for users who wish to build and run the project natively on a host machine.

- Ubuntu 16.04 and ROS Kinetic.

- Ceres Solver: Follow the official Ceres Installation guide and remember to `make install`.

- Additional ROS Packages:

sudo apt-get install ros-kinetic-cv-bridge ros-kinetic-tf ros-kinetic-message-filters ros-kinetic-image-transport
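After installation, one way to confirm that the four packages above are present is to query the package database for each of them. A minimal sketch, assuming a Debian-based host such as the Ubuntu 16.04 system listed above; `missing_ros_packages` is an illustrative name.

```shell
# Print every required ROS Kinetic package that is not fully installed.
# missing_ros_packages is a hypothetical helper, not part of VINS-Mono.
missing_ros_packages() {
    for pkg in ros-kinetic-cv-bridge ros-kinetic-tf \
               ros-kinetic-message-filters ros-kinetic-image-transport; do
        # dpkg-query reports "install ok installed" for a fully installed package.
        if ! dpkg-query -W -f='${Status}' "$pkg" 2>/dev/null \
                | grep -q "install ok installed"; then
            echo "$pkg"
        fi
    done
}

missing="$(missing_ros_packages)"
if [ -z "$missing" ]; then
    echo "All required ROS packages are installed."
else
    echo "Missing packages:"
    echo "$missing"
fi
```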

Clone the repository into your Catkin workspace and build:

cd ~/catkin_ws/src

git clone https://github.com/HKUST-Aerial-Robotics/VINS-Mono.git

cd ../

catkin_make

source ~/catkin_ws/devel/setup.bash

You can test the system with datasets like EuRoC. For example, to run with the MH_01_easy.bag:

- Launch the estimator:

roslaunch vins_estimator euroc.launch

- Launch RViz:

roslaunch vins_estimator vins_rviz.launch

- Play the dataset:

rosbag play /path/to/your/dataset/MH_01_easy.bag
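The three steps above are normally run in separate terminals. As a convenience, they can be sketched as a single script that starts the two launch files in the background and then plays the bag. This assumes the workspace has already been sourced; `BAG`, `DRY_RUN`, `run`, and `run_euroc_demo` are illustrative names, and the 5-second startup delay is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Sketch: run estimator, RViz and bag playback from one shell.
# BAG and DRY_RUN are hypothetical variables, not part of VINS-Mono.
BAG="${BAG:-/path/to/your/dataset/MH_01_easy.bag}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

run_euroc_demo() {
    run roslaunch vins_estimator euroc.launch &      # estimator, in background
    est_pid=$!
    run roslaunch vins_estimator vins_rviz.launch &  # RViz, in background
    rviz_pid=$!
    if [ "$DRY_RUN" != "1" ]; then
        sleep 5                                      # give the nodes time to start
    fi
    run rosbag play "$BAG"                           # returns when playback ends
    if [ "$DRY_RUN" != "1" ]; then
        kill "$est_pid" "$rviz_pid" 2>/dev/null      # stop both launch processes
    fi
    wait "$est_pid" "$rviz_pid" 2>/dev/null
    return 0
}

run_euroc_demo
```

By default the script only prints the commands it would run. Setting `DRY_RUN=0` and pointing `BAG` at a real file executes them; the estimator and RViz are stopped once playback ends.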

The VINS-Mono source code is released under the GPLv3 license.

This work utilizes Ceres Solver for non-linear optimization and DBoW2 for loop detection.